What this is

A Python-based log parser and weather enricher. It demonstrates how to combine multiple Python libraries in a single, cohesive workflow: CLI argument parsing, logging, CSV processing with pandas, live API integration, defensive error handling, and automated exporting/archiving.

Purpose: skills practice and demonstration. This is a conceptual exercise to learn, integrate, and ship a clean, original script.

Below is a demonstration of the script in action.

Tech stack

- Python (3.x)

- pandas – CSV parsing & data enrichment

- requests – Weather API calls

- python-dotenv – load WEATHER_API_KEY from .env

- argparse – CLI interface

- logging – structured logs: main.log, errors.log, export.log

- zipfile – package outputs

- Standard libs: os, sys, json, time, datetime, pathlib

What the script does

- Accepts CLI input: python3 main.py logs.csv "Tokyo" --units C

  - csv: path to input log file

  - city: city for live weather lookup

  - --units: C or F (default F)

- Reads logs & filters only CRITICAL rows with pandas.

- Pulls live weather (current temp + condition) from a public API using an API key loaded from .env.

- Enriches each CRITICAL entry with the weather fields.

- Exports the result as timestamped CSV and JSON, then zips both into a single archive. Example outputs:

  - critical_with_weather_YYYYMMDD_HHMM.csv

  - critical_with_weather_YYYYMMDD_HHMM.json

  - critical_with_weather_YYYYMMDD_HHMM.zip

- Logs the lifecycle (start/end, API attempts, errors, export/zip steps) to dedicated log files.

Development log (Parts 1–3)

Part 1 — Argument parsing & logging foundation

Goal: Establish a solid base so the script accepts user input and reports progress and errors consistently. Here’s what I did:

- Implemented positional CLI args (csv, city) and optional --units.

- Validated input file exists; fail fast with a clear message.

- Built a split logging system:

  - main.log – info/progress

  - errors.log – warnings/errors

  - export.log – export/packaging events (set up here for later phases)

- Logged Script start/end for clear lifecycle markers.

- Note: I implemented this on my own first, then used AI for polish and troubleshooting (e.g., avoiding multiple basicConfig() pitfalls and separating concerns with dedicated loggers).
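The split logging setup described above could look roughly like the sketch below. It is an illustration, not the script's actual code: the helper names build_logger and setup_loggers, the logger names, and the log format are all assumptions. Each logger gets its own file handler, and basicConfig() is never called.

```python
import logging
from pathlib import Path


def build_logger(name: str, logfile: Path, level: int) -> logging.Logger:
    """Return a named logger that writes only to its own file (hypothetical helper)."""
    logger = logging.getLogger(name)
    logger.setLevel(level)
    logger.propagate = False  # keep records out of the root logger
    if not logger.handlers:  # avoid stacking duplicate handlers on repeated calls
        handler = logging.FileHandler(logfile, encoding="utf-8")
        handler.setFormatter(
            logging.Formatter("%(asctime)s %(levelname)s %(name)s: %(message)s")
        )
        logger.addHandler(handler)
    return logger


def setup_loggers(log_dir: Path = Path(".")):
    """Create the three loggers; names and levels are assumptions."""
    main_logger = build_logger("main", log_dir / "main.log", logging.INFO)
    errors_logger = build_logger("errors", log_dir / "errors.log", logging.WARNING)
    export_logger = build_logger("export", log_dir / "export.log", logging.INFO)
    return main_logger, errors_logger, export_logger
```

Because each logger has propagate set to False and its own handler, a message sent to one logger lands in exactly one file.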

Part 2 — Resilience + enrichment

Focus: Make the script resilient and enrich the filtered data with live weather info.

- Constructed the Weather API URL using the CLI city and WEATHER_API_KEY from .env.

- Added retry logic with a short delay for transient request failures.

- Robust exception handling for:

  - missing/invalid CSV

  - empty CSV or bad format

  - request failures/timeouts

  - invalid JSON or missing keys

- Filtered CRITICAL rows via pandas; handled “no CRITICAL rows” gracefully.

- Enriched the filtered DF with weather_location, weather_temp, weather_unit, weather_condition.

  - Used .copy() before enrichment to avoid SettingWithCopyWarning.

- Exported to CSV and JSON (initially with static filenames).
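One way to cover the CSV-related failure cases listed above is sketched here. The function name load_critical_rows and the LogInputError exception are hypothetical, introduced only for illustration; the pandas exception classes are real. An empty result is returned as an empty DataFrame so the caller can handle the “no CRITICAL rows” case itself.

```python
import pandas as pd


class LogInputError(Exception):
    """Hypothetical error type for unusable input files."""


def load_critical_rows(csv_path: str) -> pd.DataFrame:
    """Load the CSV and return only CRITICAL rows as an independent copy."""
    try:
        df = pd.read_csv(csv_path)
    except FileNotFoundError as exc:
        raise LogInputError(f"CSV not found: {csv_path}") from exc
    except pd.errors.EmptyDataError as exc:
        raise LogInputError(f"CSV is empty: {csv_path}") from exc
    except pd.errors.ParserError as exc:
        raise LogInputError(f"CSV is malformed: {csv_path}") from exc

    if "level" not in df.columns:
        raise LogInputError("CSV has no 'level' column")

    # .copy() gives a frame that is safe to mutate during enrichment.
    return df[df["level"] == "CRITICAL"].copy()
```

A caller could check `rows.empty` after this call and exit with a friendly message instead of exporting empty files.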

Part 3 — Export polish & packaging

Focus: Professionalize the export flow and user experience.

- Timestamped filenames (YYYYMMDD_HHMM) for CSV/JSON to avoid overwrites and aid traceability.

- Zipped exports into a single archive for clean delivery.

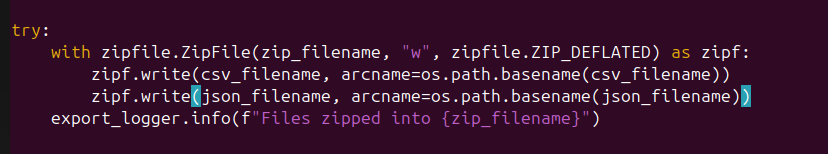

- Used arcname=os.path.basename(...) in zipf.write() so the ZIP contains just file names, not host paths.

- Prompted the user (yes/no/quit) whether to delete the original CSV/JSON after zipping.

- Expanded export lifecycle logging (file creation, zip success, cleanup).

- Ensured clean terminal output and avoided pandas warnings by working on a copied DF.
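The export flow above can be sketched as follows. This is a minimal illustration under assumptions: the function name export_and_zip, the JSON orientation, and the compression setting are not taken from the script, and the interactive yes/no/quit cleanup prompt is left out.

```python
import os
import zipfile
from datetime import datetime

import pandas as pd


def export_and_zip(df: pd.DataFrame, out_dir: str = ".") -> str:
    """Write timestamped CSV/JSON exports and bundle them into one ZIP."""
    stamp = datetime.now().strftime("%Y%m%d_%H%M")  # YYYYMMDD_HHMM
    base = os.path.join(out_dir, f"critical_with_weather_{stamp}")
    csv_path, json_path, zip_path = base + ".csv", base + ".json", base + ".zip"

    df.to_csv(csv_path, index=False)
    df.to_json(json_path, orient="records", indent=2)

    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zipf:
        for path in (csv_path, json_path):
            # Store only the file name, not the directory it came from.
            zipf.write(path, arcname=os.path.basename(path))
    return zip_path
```

With minute-level timestamps, two runs inside the same minute would still overwrite each other; adding seconds to the format string is one way to tighten that.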

Troubleshooting & decisions

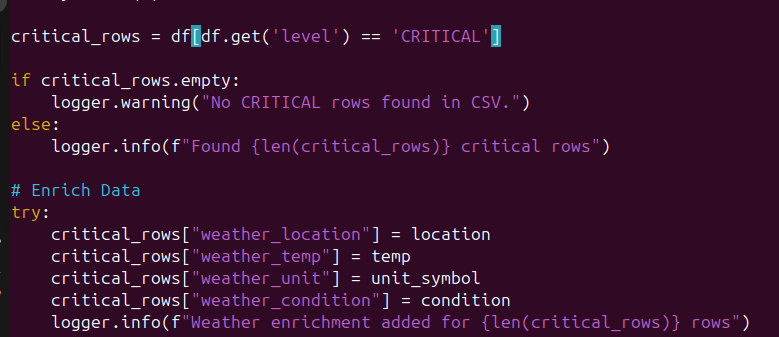

1) pandas filter/enrich bug

- Original attempt (buggy): critical_rows = df[df.get('level') == 'CRITICAL']

  - df.get('level') is the wrong tool here: it returns None instead of raising when the column is missing, which hides the real problem. Modifying a filtered view later can also trigger SettingWithCopyWarning.

- Final fix: critical_rows = df[df['level'] == 'CRITICAL'].copy()

  - Use column selection via df['level'] and create a copy before mutation.
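A small, self-contained demonstration of the difference, using made-up sample rows and a placeholder weather value:

```python
import pandas as pd

df = pd.DataFrame(
    {
        "level": ["INFO", "CRITICAL", "CRITICAL"],
        "message": ["ok", "disk full", "db down"],
    }
)

# df.get() returns None for a missing column instead of raising KeyError,
# so a typo in the column name is not reported where it happens.
missing = df.get("severity")

# Explicit column lookup, then copy before adding columns.
critical_rows = df[df["level"] == "CRITICAL"].copy()
critical_rows["weather_condition"] = "Sunny"  # placeholder value

# The original frame keeps its two columns; only the copy was enriched.
print(list(df.columns))
print(list(critical_rows.columns))
```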

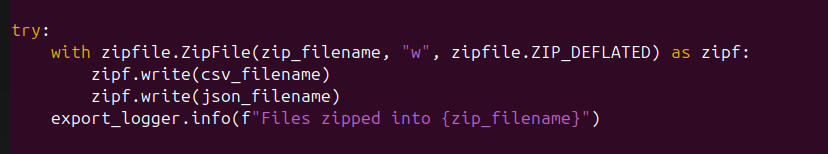

2) ZIP contents & “zip slip” learning

- Original zipping:

- This can store full/relative paths as-is.

- Reviewed and improved:

- I reviewed this choice and learned about zip-slip (a path traversal vulnerability during extraction).

- My script only creates archives; it doesn’t extract them, so it’s not directly vulnerable.

- Still, using arcname=os.path.basename(...) is a no-downside best practice that keeps archives clean and predictable.
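To see what arcname changes, the sketch below zips the same file twice and compares the member names stored in each archive. The helper archive_names and the file layout are invented for the demonstration.

```python
import os
import tempfile
import zipfile


def archive_names(file_path: str, use_basename: bool) -> list:
    """Zip one file and return the member names stored in the archive."""
    zip_path = file_path + ".zip"
    with zipfile.ZipFile(zip_path, "w") as zipf:
        if use_basename:
            zipf.write(file_path, arcname=os.path.basename(file_path))
        else:
            # Without arcname, the path is stored too (minus any drive
            # letter and leading separators).
            zipf.write(file_path)
    with zipfile.ZipFile(zip_path) as zipf:
        return zipf.namelist()


with tempfile.TemporaryDirectory() as tmp:
    report = os.path.join(tmp, "exports", "report.csv")
    os.makedirs(os.path.dirname(report))
    with open(report, "w", encoding="utf-8") as fh:
        fh.write("level,message\n")

    with_dirs = archive_names(report, use_basename=False)
    flat = archive_names(report, use_basename=True)

print(with_dirs)  # member name includes the directory components
print(flat)       # member name is just the file name
```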

Code walkthroughs (with line-by-line explanations)

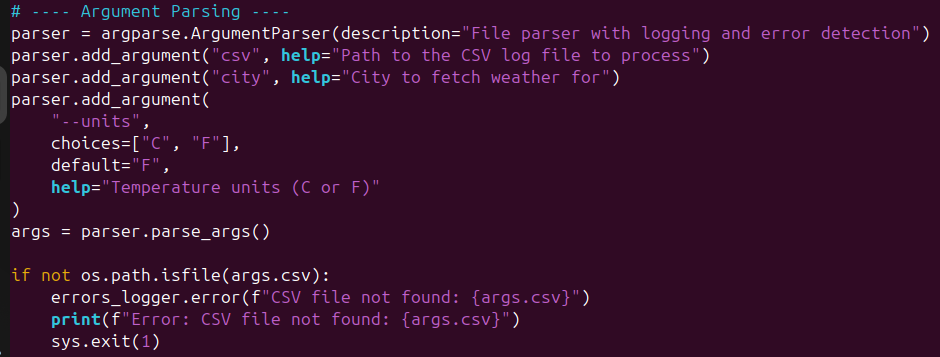

Walkthrough A — CLI parsing & early validation

Line by line

- ArgumentParser(...): creates a CLI interface with a helpful description.

- add_argument("csv"): required positional argument for the input CSV path.

- add_argument("city"): required positional argument for the target city.

- add_argument("--units", choices=["C","F"], default="F"): optional flag constrained to C/F.

- args = parser.parse_args(): parses actual CLI values into args.

- if not os.path.isfile(args.csv): fail fast if the file doesn’t exist.

- errors_logger.error(...): record the problem in errors.log.

- print(...) + sys.exit(1): inform user and exit non-zero.

- logger.info(...): confirm the CSV is found in main.log.

Why this matters

- Clear contracts for how to run the script.

- Early, explicit validation and structured logging improve UX and debuggability.
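The code for this walkthrough is not reproduced in the text, so here is a rough reconstruction from the line-by-line notes. The description string, help texts, messages, logger names, and the split into parse_args and validate_input are assumptions, not the script's actual wording.

```python
import argparse
import logging
import os
import sys

logger = logging.getLogger("main")           # assumed logger name
errors_logger = logging.getLogger("errors")  # assumed logger name


def parse_args(argv=None) -> argparse.Namespace:
    """Parse CLI arguments; argv=None means 'use sys.argv'."""
    parser = argparse.ArgumentParser(
        description="Filter CRITICAL log rows and enrich them with live weather."
    )
    parser.add_argument("csv", help="path to the input log file")
    parser.add_argument("city", help="city for the live weather lookup")
    parser.add_argument(
        "--units", choices=["C", "F"], default="F", help="temperature unit (default: F)"
    )
    return parser.parse_args(argv)


def validate_input(args: argparse.Namespace) -> None:
    """Exit with status 1 if the input CSV does not exist."""
    if not os.path.isfile(args.csv):
        errors_logger.error("Input file not found: %s", args.csv)
        print(f"Error: file not found: {args.csv}")
        sys.exit(1)
    logger.info("Found input file: %s", args.csv)
```

Accepting an explicit argv list makes the parser easy to exercise without touching the real command line, for example `parse_args(["logs.csv", "Tokyo", "--units", "C"])`.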

Walkthrough B — Weather API request with retry + parsing

    url = f"https://api.weatherapi.com/v1/current.json?q={args.city}&key={API_KEY}"

    # Up to three attempts; the loop breaks on the first HTTP 200.
    for attempt in range(3):
        try:
            response = requests.get(url, timeout=5)
            if response.status_code == 200:
                break
            else:
                logging.warning(f"Attempt {attempt+1}: API returned {response.status_code}")
        except requests.exceptions.RequestException as e:
            logging.warning(f"Attempt {attempt+1}: {e}")
        time.sleep(2)
    else:
        # for/else: reached only when no attempt hit `break`.
        print("All attempts failed.")
        errors_logger.error("All API attempts failed.")
        sys.exit(1)

    data = response.json()
    location = f"{data['location']['name']}, {data['location']['country']}"
    temp, unit_symbol = (
        (data["current"]["temp_f"], "°F") if args.units == "F" else (data["current"]["temp_c"], "°C")
    )
    condition = data["current"]["condition"]["text"]

Line by line

- Build url using user-provided city and secret API_KEY.

- for attempt in range(3): bounded retry loop.

- requests.get(..., timeout=5): prevent hanging; fail fast.

- if response.status_code == 200: break: success path exits the loop.

- logging.warning(...): log non-200s and network exceptions.

- time.sleep(2): small backoff between attempts.

- else: on the for: runs only if loop never breaks; we bail with log + exit.

- response.json(): parse JSON payload (exception-handled in the full script).

- Extract location, choose temp by unit, and get condition.

Why this matters

- Solid network hygiene (timeouts, bounded retries).

- Clean separation of transport errors vs data parsing.

- Reliable behavior under flaky networks.
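The walkthrough notes that JSON parsing is exception-handled in the full script, which is not shown here. One possible shape for that step is sketched below, using only the standard library so it runs without a network call. The function name parse_weather is hypothetical; the payload keys are the ones used in the snippet above. With requests, response.json() likewise raises a ValueError subclass on an invalid body.

```python
import json


def parse_weather(body: str, units: str = "F") -> dict:
    """Turn a raw JSON response body into the four enrichment fields."""
    try:
        payload = json.loads(body)
    except json.JSONDecodeError as exc:
        raise ValueError(f"Weather API returned invalid JSON: {exc}") from exc

    try:
        loc = payload["location"]
        current = payload["current"]
        temp = current["temp_f"] if units == "F" else current["temp_c"]
        return {
            "weather_location": f"{loc['name']}, {loc['country']}",
            "weather_temp": temp,
            "weather_unit": "°F" if units == "F" else "°C",
            "weather_condition": current["condition"]["text"],
        }
    except (KeyError, TypeError) as exc:
        # Missing keys or an unexpected structure end up here.
        raise ValueError(f"Unexpected weather payload: {exc!r}") from exc
```

Raising a single ValueError for both failure modes lets the caller log one clear message to errors.log and exit, keeping transport errors and data errors in separate code paths.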

Walkthrough C — Filter & enrich safely with pandas

    df = pd.read_csv(args.csv)
    critical_rows = df[df['level'] == 'CRITICAL'].copy()

    critical_rows.loc[:, "weather_location"] = location
    critical_rows.loc[:, "weather_temp"] = temp
    critical_rows.loc[:, "weather_unit"] = unit_symbol
    critical_rows.loc[:, "weather_condition"] = condition

Line by line

- pd.read_csv(...): load the input logs into a DataFrame.

- df['level'] == 'CRITICAL': boolean mask for the target rows.

- .copy(): create a new, writable DataFrame to avoid view/assignment issues.

- .loc[:, "weather_location"] = ...: column-wise assignment (explicit, clear).

- Repeat for weather_temp, weather_unit, weather_condition.

Why this matters

- Demonstrates correct pandas filtering and mutation without SettingWithCopyWarning.

- Keeps the original df untouched while producing a clean enriched subset.

Reflection:

Compared to my Nmap Dashboard project, I used AI less here:

- I wrote most of the code myself, then used AI for polish, debugging, and second opinions.

- When AI suggested alternatives, I researched and decided deliberately (e.g., .copy() to avoid chained assignment issues, arcname for ZIP hygiene).

- The process helped me go a layer deeper into Python and pandas; I can now read and explain everything I wrote and most AI suggestions I kept.

How to run

    python3 -m venv venv
    source venv/bin/activate
    pip install -r requirements.txt

    # create .env with your API key
    echo 'WEATHER_API_KEY=your_api_key_here' > .env

    # run the script
    python3 main.py logs.csv "Tokyo" --units C

Outputs

- critical_with_weather_YYYYMMDD_HHMM.csv

- critical_with_weather_YYYYMMDD_HHMM.json

- critical_with_weather_YYYYMMDD_HHMM.zip

TRY IT OUT AT https://github.com/yairemartinez/Log-Parser-Weather-Enricher

Closing Summary

Working through this capstone gave me confidence in handling end-to-end Python scripting projects, from parsing input and enriching data to handling errors, exporting results, and securing the workflow. While the script itself is conceptual, the process taught me transferable skills: how to think about data pipelines, build resilient code, and apply security-minded decision making.

THANKS FOR READING. 🙂